Knowledge editing for large language models can offer an efficient solution to alter a model’s

behavior without negatively impacting the overall performance. However, current approaches

encounter issues with limited generalizability across tasks, necessitating one distinct editor for each task,

which significantly hinders broader application. To address this, we take the first

step to analyze the multi-task generalization issue in knowledge editing. Specifically,

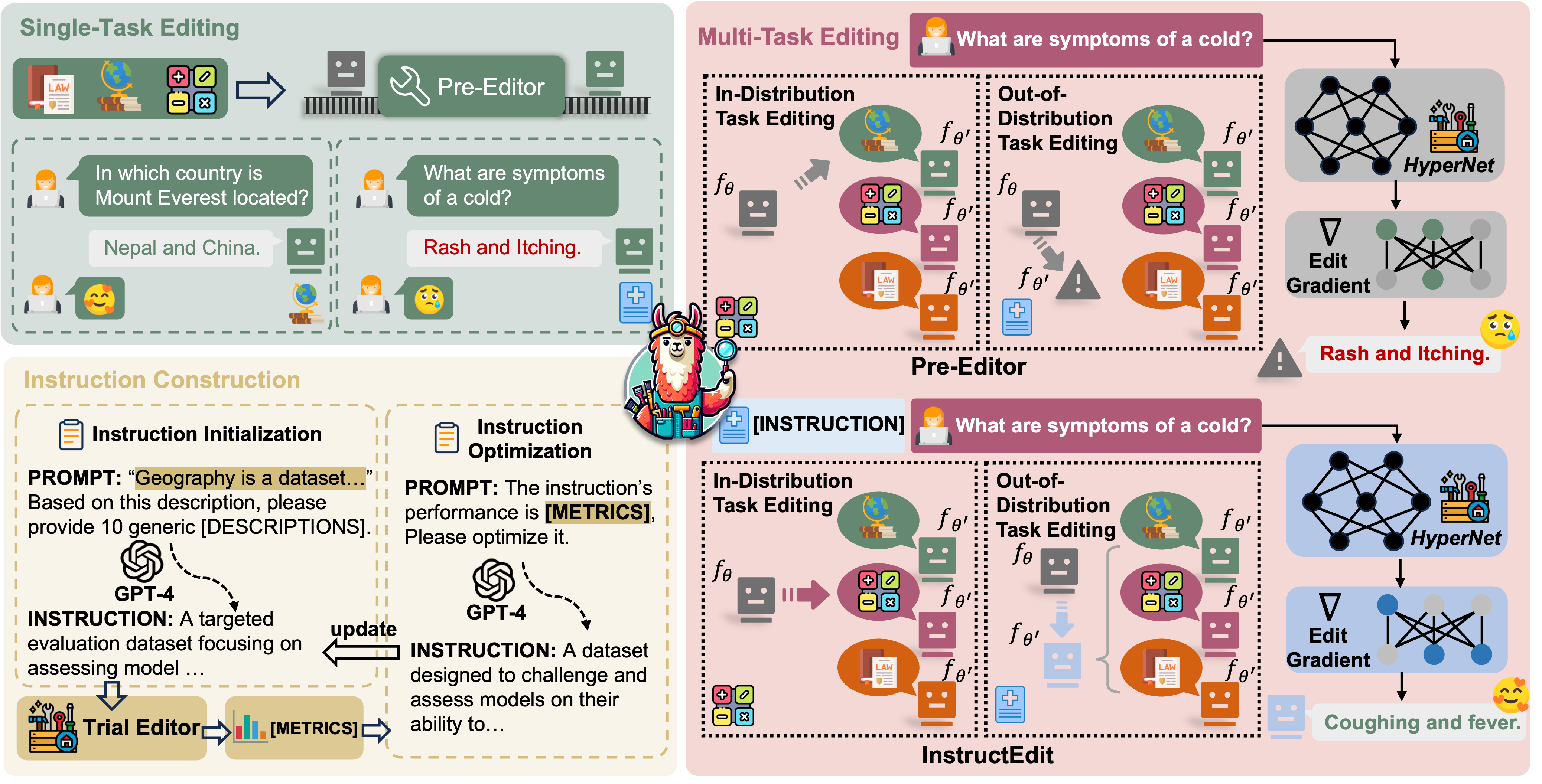

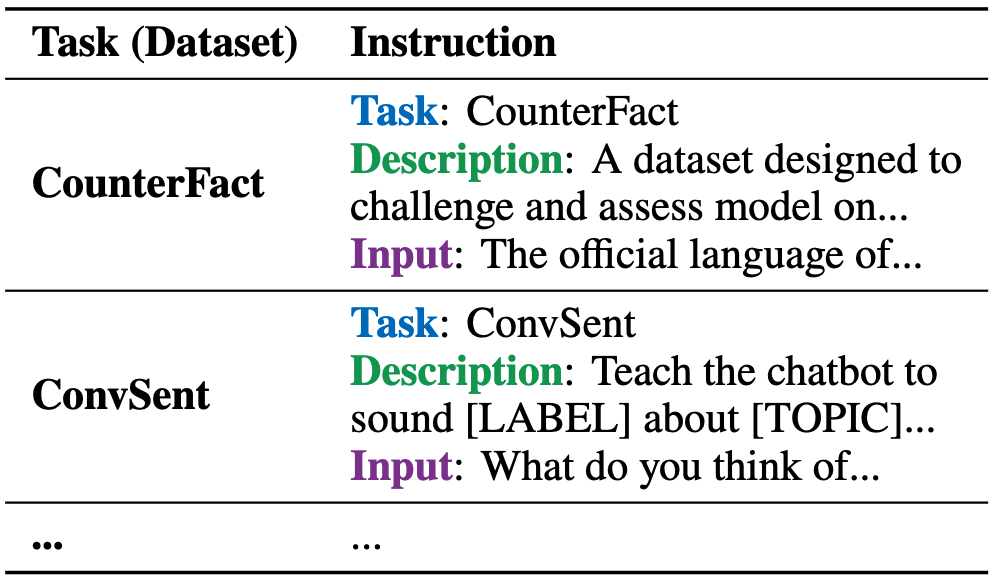

we develop an instruction-based editing technique, termed InstructEdit, which

facilitates the editor's adaptation to various task performances simultaneously using simple instructions.

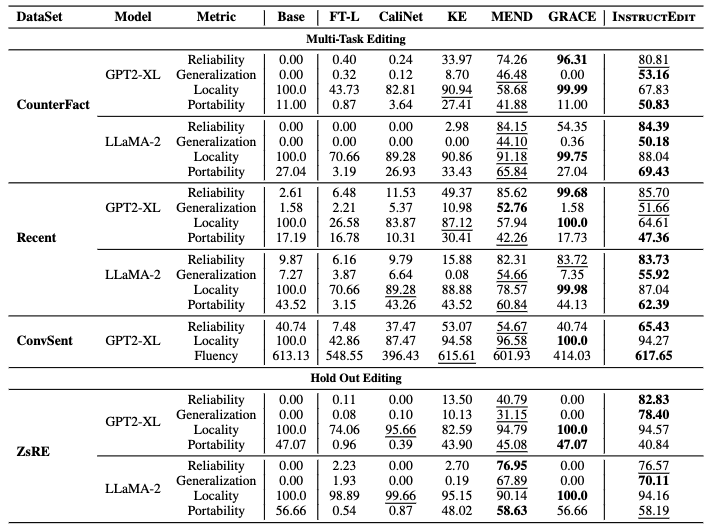

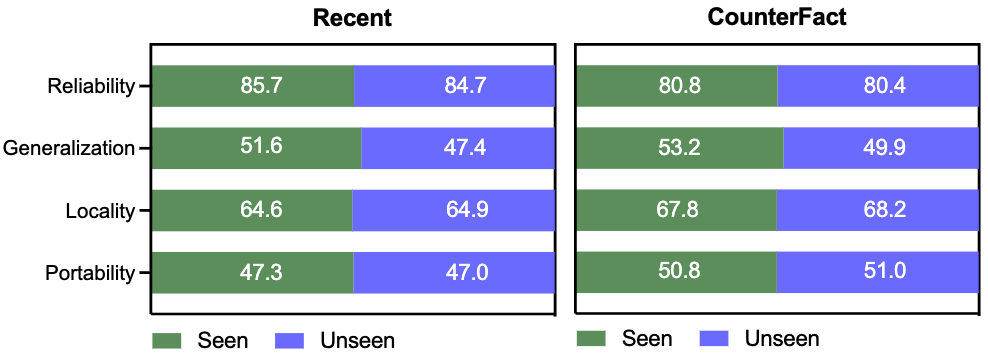

With only one unified editor for each LLM, we empirically demonstrate that InstructEdit can improve the editor's control,

leading to an average 14.86% increase in Reliability in the multi-task editing setting. Furthermore, experiments involving a held-out

unseen task illustrate that InstructEdit consistently surpasses previous strong baselines. To further investigate the

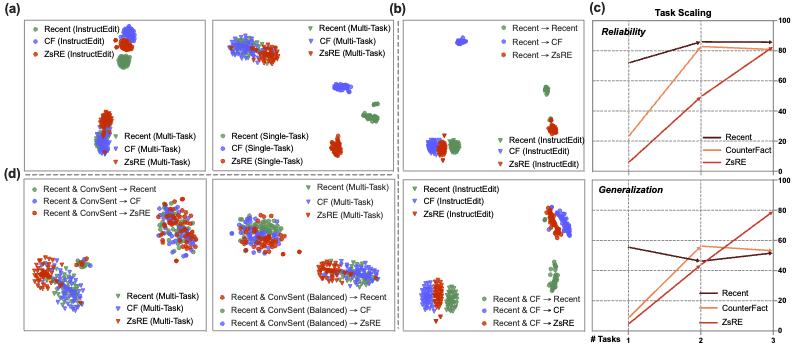

underlying mechanisms of instruction-based knowledge editing, we analyze the principal components of the editing gradient directions,

which reveals that instructions can help control the optimization direction, with stronger out-of-distribution (OOD) generalization.
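
To make the gradient analysis above more concrete, here is a minimal sketch of extracting the first principal component from a set of gradient vectors and projecting each task's gradients onto it. It is an illustration only, not the paper's analysis code: the function names, the power-iteration approach, and the two-dimensional toy "gradients" are all assumptions made for this example, and the numbers are invented.

```python
import math
import random


def first_principal_component(vectors, iters=200, seed=0):
    """Return (mean, unit direction) for the top principal component.

    Power iteration on the covariance matrix, applied implicitly.
    Hypothetical helper written for illustration.
    """
    n, d = len(vectors), len(vectors[0])
    mean = [sum(v[j] for v in vectors) / n for j in range(d)]
    centered = [[v[j] - mean[j] for j in range(d)] for v in vectors]

    # Random unit-length starting direction.
    rng = random.Random(seed)
    w = [rng.uniform(-1.0, 1.0) for _ in range(d)]
    start_norm = math.sqrt(sum(c * c for c in w))
    w = [c / start_norm for c in w]

    for _ in range(iters):
        # Covariance times w, computed as X^T (X w) / n.
        scores = [sum(x[j] * w[j] for j in range(d)) for x in centered]
        cw = [sum(scores[i] * centered[i][j] for i in range(n)) / n
              for j in range(d)]
        norm = math.sqrt(sum(c * c for c in cw))
        if norm == 0.0:
            break  # no variance along w, so keep the current direction
        w = [c / norm for c in cw]
    return mean, w


def project(vectors, mean, direction):
    """Project mean-centered vectors onto a unit direction."""
    return [sum((v[j] - mean[j]) * direction[j] for j in range(len(mean)))
            for v in vectors]


# Invented toy "editing gradients" for two hypothetical tasks.
task_a_grads = [[3.0, 0.1], [2.8, -0.1], [3.2, 0.0]]
task_b_grads = [[-3.0, 0.1], [-2.9, -0.05], [-3.1, 0.0]]

mean, pc1 = first_principal_component(task_a_grads + task_b_grads)
proj_a = project(task_a_grads, mean, pc1)
proj_b = project(task_b_grads, mean, pc1)
```

In this toy setup the two tasks land on opposite sides of the first principal component, which is the kind of separation one would look for when asking whether gradients from different tasks point in distinct directions.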

InstructEdit enhances the Multi-Task Editor by guiding it to choose the right "tool" for different tasks. Normally, the editor might not always pick the best approach on its own. With InstructEdit, when you give clear instructions, the editor gets better at understanding what you need and acts more effectively. Think of it as adding a smart assistant to the editor: you tell it what to do, and it does the job more efficiently and accurately.
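
As a rough illustration of what "giving the editor instructions" could look like in practice, the sketch below prepends a task-specific instruction to each edit request before it is handed to a single shared editor. Everything here is hypothetical: the `EditRequest` type, the task names, the instruction wording, and the prompt template are assumptions made for this example and are not taken from InstructEdit itself.

```python
from dataclasses import dataclass


@dataclass
class EditRequest:
    task: str     # hypothetical task identifier
    prompt: str   # the input whose answer should change
    target: str   # the desired new output


# Hypothetical instruction texts, one per task.
TASK_INSTRUCTIONS = {
    "fact_update": "Replace an outdated fact with the new answer.",
    "sentiment": "Change the sentiment expressed about the given topic.",
}


def build_editor_input(request, instructions=TASK_INSTRUCTIONS):
    """Prefix the edit prompt with its task instruction.

    Raises KeyError for tasks without a registered instruction, so an
    unknown task is never silently edited without guidance.
    """
    if request.task not in instructions:
        raise KeyError(f"no instruction registered for task {request.task!r}")
    return (
        f"Task: {request.task}\n"
        f"Instruction: {instructions[request.task]}\n"
        f"Input: {request.prompt}"
    )


example = EditRequest(
    task="fact_update",
    prompt="Who is the CEO of Acme?",
    target="Jane Doe",
)
editor_input = build_editor_input(example)
```

The design point is that one editor sees every task, and the instruction prefix is the only signal telling it which kind of edit is being requested.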
